SF 6th and Mission Environment: 3 source cameras (shown in red), 100 evaluation cameras (shown in green).

Recent implicit neural representations have shown great results for novel-view synthesis. However, existing methods require expensive per-scene optimization from many views, which limits their application to real-world unbounded urban settings where the objects of interest or backgrounds are observed from very few views.

To mitigate this challenge, we introduce a new approach called NeO 360, Neural fields for few-view view synthesis of outdoor scenes. NeO 360 is a generalizable method that reconstructs 360° scenes from a single or a few posed RGB images. The essence of our approach is capturing the distribution of complex real-world outdoor 3D scenes and using a hybrid image-conditional tri-planar representation that can be queried at any world point. Our representation combines the best of voxel-based and bird's-eye-view (BEV) representations and is more effective and expressive than either. NeO 360's representation allows us to learn from a large collection of unbounded 3D scenes while generalizing to new views and novel scenes from as few as a single image at inference time.
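One way to see why a tri-planar representation sits between the voxel and BEV alternatives is to count stored feature values. The sketch below is a back-of-the-envelope comparison, not an analysis from the paper: the resolution `n = 128` and channel count `c = 32` are hypothetical, and `feature_counts` is an illustrative helper. A dense voxel grid grows as N³·C, a single BEV plane as N²·C, and a triplane as 3·N²·C. Assuming z is the vertical axis, the triplane keeps BEV-like quadratic memory while its two vertical planes (xz and yz) retain height information that a single ground plane collapses.

```python
def feature_counts(n: int, c: int) -> dict[str, int]:
    """Number of stored feature values per representation.

    n: hypothetical spatial resolution per axis; c: hypothetical channel count.
    """
    return {
        "voxel": n ** 3 * c,         # dense N x N x N grid of C-dim features
        "bev": n ** 2 * c,           # one ground-plane (bird's-eye-view) grid
        "triplane": 3 * n ** 2 * c,  # three axis-aligned N x N planes
    }


for name, count in feature_counts(128, 32).items():
    print(f"{name}: {count:,} values")
# voxel: 67,108,864 values
# bev: 524,288 values
# triplane: 1,572,864 values
```

Storage is only one axis of comparison; the expressiveness claim in the text concerns what each representation can encode, which a count like this does not measure.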

We demonstrate our approach on NeRDS360, our proposed challenging 360° unbounded dataset, and show that NeO 360 outperforms state-of-the-art generalizable methods for novel-view synthesis while also offering editing and composition capabilities.

NeRDS360 ("NeRF for Reconstruction, Decomposition and Scene Synthesis of 360° outdoor scenes") is a dataset of 75 diverse unbounded scenes with full multi-view annotations for generalizable NeRF training and evaluation.


SF Grant and California Environment: 5 source cameras (shown in red), 100 evaluation cameras (shown in green).

SF VanNess Ave and Turk Environment: training camera distribution (shown in blue), evaluation camera distribution (shown in green).

SF 6th and Mission Environment: training camera distribution (shown in blue), evaluation camera distribution (shown in green).

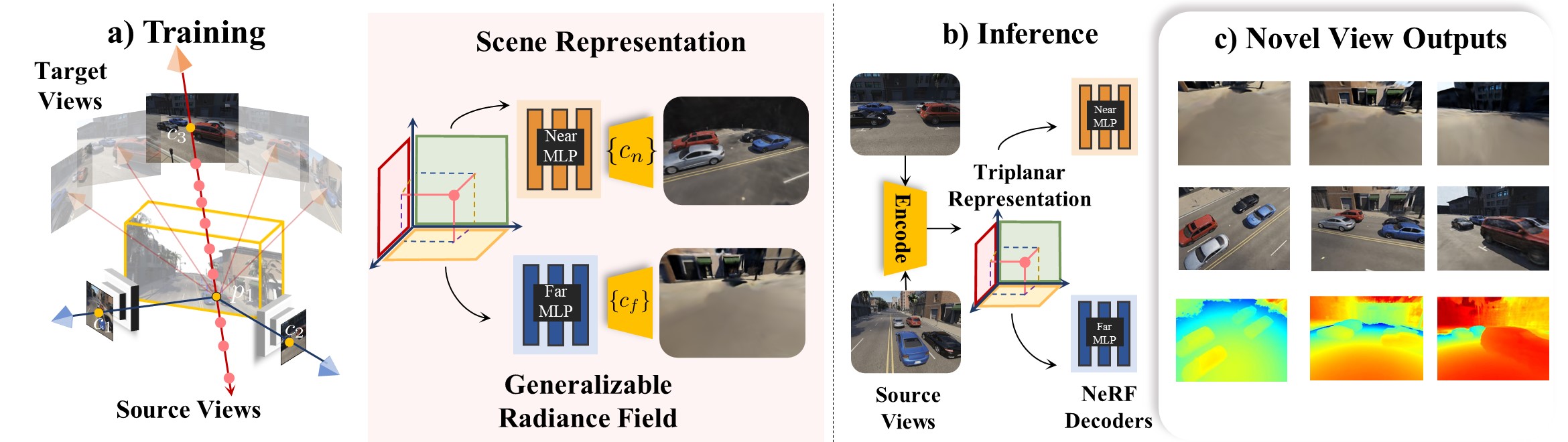

Overview: a) Given just a single or a few input images from a novel scene, our method reconstructs and renders new 360° views of complex unbounded outdoor scenes. b) We achieve this by constructing an image-conditional triplane representation that models the 3D surroundings from various perspectives. c) Our method generalizes across novel scenes and viewpoints for complex 360° outdoor scenes.

NeO 360 Architecture: Our method uses local features to infer an image-conditional triplanar representation for both backgrounds and foregrounds. The triplanar features of a position x are obtained by orthogonally projecting x onto each plane and bilinearly interpolating the feature vectors there. Dedicated NeRF decoder MLPs then regress density and color separately for the foreground and the background.
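The projection-and-interpolation step described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the NeO 360 implementation: the plane names (`xy`, `xz`, `yz`), the normalization of query points to [-1, 1]³, and summation as the way to merge the three plane features are all assumptions (concatenation is a common alternative). The plane features here are random stand-ins rather than features inferred from input images, and the helpers `bilinear_sample` and `query_triplane` are hypothetical names.

```python
import numpy as np


def bilinear_sample(plane: np.ndarray, u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Bilinearly interpolate a (C, H, W) feature plane at N continuous locations.

    u indexes the width axis and v the height axis; both are in [-1, 1],
    with -1 mapping to the first pixel and +1 to the last. Returns (N, C).
    """
    _, h, w = plane.shape
    # Normalized coordinates -> continuous pixel coordinates, clamped to the grid.
    x = np.clip((u + 1.0) * 0.5 * (w - 1), 0.0, w - 1)
    y = np.clip((v + 1.0) * 0.5 * (h - 1), 0.0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0  # fractional offsets, shape (N,)
    # Each gather below has shape (C, N); blend along x, then along y.
    top = plane[:, y0, x0] * (1 - wx) + plane[:, y0, x1] * wx
    bottom = plane[:, y1, x0] * (1 - wx) + plane[:, y1, x1] * wx
    return (top * (1 - wy) + bottom * wy).T


def query_triplane(planes: dict, points: np.ndarray) -> np.ndarray:
    """Look up a feature for each of N points given as an (N, 3) array in [-1, 1]^3.

    Each point is orthogonally projected onto the xy, xz and yz planes by
    dropping one coordinate. Summing the three features is an assumption.
    """
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    f_xy = bilinear_sample(planes["xy"], x, y)  # drop z
    f_xz = bilinear_sample(planes["xz"], x, z)  # drop y
    f_yz = bilinear_sample(planes["yz"], y, z)  # drop x
    return f_xy + f_xz + f_yz


# Random stand-in planes (C=8, 32x32); the described method infers them from images.
rng = np.random.default_rng(0)
planes = {k: rng.standard_normal((8, 32, 32)) for k in ("xy", "xz", "yz")}
points = rng.uniform(-1.0, 1.0, size=(5, 3))
print(query_triplane(planes, points).shape)  # (5, 8)
```

In the architecture described above, each per-point feature would then be passed to the foreground or background decoder MLP to regress density and color; that step is omitted here. Unbounded scenes would also need some mapping of far-away points into the bounded plane coordinates, which this sketch does not attempt.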

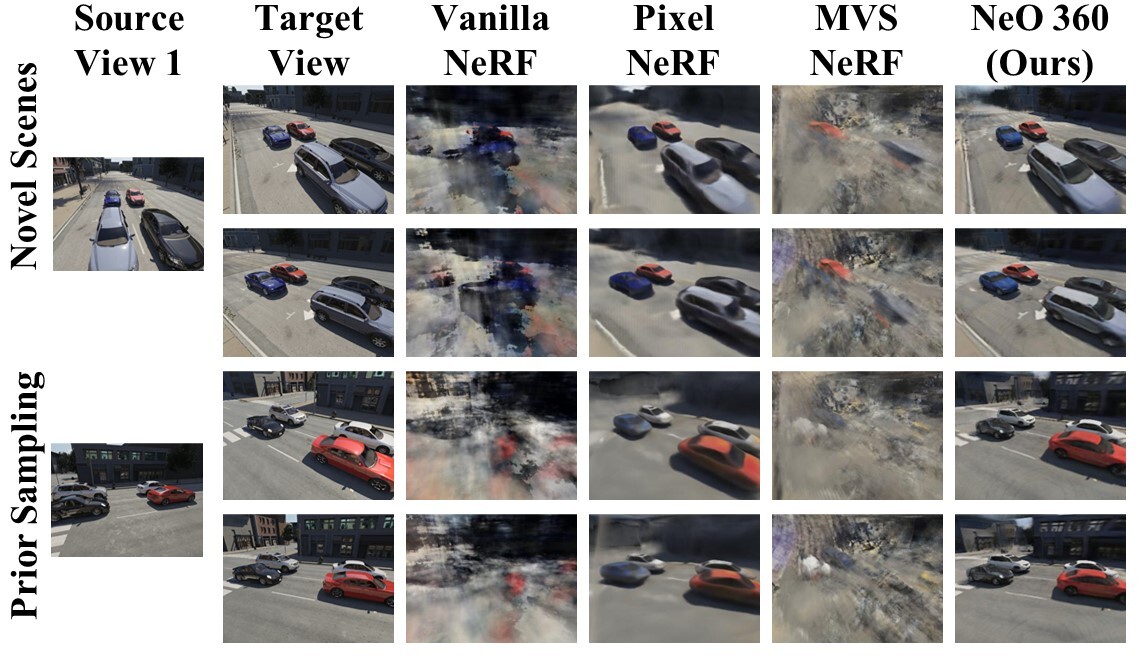

Our method excels at novel-view synthesis from 3 source views, outperforming strong generalizable NeRF baselines. Vanilla NeRF struggles because it overfits to these 3 views. MVSNeRF, although generalizable, is limited to nearby views, as stated in the original paper, and therefore struggles with the distant views in this more challenging task. PixelNeRF's renderings also contain artifacts in far backgrounds.

Qualitative 3-view view synthesis results: Comparisons with baselines.

Qualitative 3-view view synthesis results: Close-up comparison with PixelNeRF.